If you are a software engineer: DON'T PANIC! This blog is my place to beam thoughts on the universe of Artificial Intelligence and Software Architecture right to your screen. On my infinite mission to boldly go where (almost) no one has gone before, I will provide in-depth coverage of architectural and AI topics, personal opinions, humor, philosophical discussions, interesting news and technology evaluations. (c) Prof. Dr. Michael Stal

Wednesday, December 03, 2008

Panic mode

If you look at software development projects, you'll often find the same issues. As soon as deadlines slip or are dropped, participants start to get increasingly nervous. After a while, the whole organization typically enters panic mode. There is a possible alternative flow which I call Lemmings mode: people refuse to recognize that they are facing severe problems and continue the project without any countermeasures, which is typical human behavior.

As an alien, you'll recognize panic mode in an existing project by several indicators. For example, if meetings are frequent and architects and developers spend more time in meetings than on productive work, this might indicate either developer's hell or a project in panic mode. The same holds when the organization constantly generates or enforces new, uncontrolled, and unplanned activities (actionism) at a higher rate than they can be processed. If you interview people in such a project context, you'll receive inconsistent and contradictory statements, because there is no synchronization or coordination in place.

Although it is obvious that panic mode worsens the situation and even leads to conflicts among the people involved, projects fall into this trap pretty often. I believe this is caused by the psychological fact that all participants in such a project are caught in the trap themselves. In these situations, I generally recommend involving an external person who is not part of the problem (aka the project) and who acts as an external observer and mediator. In addition, this mediator needs a peer in the organization's senior management who is able to enforce all recommendations (if accepted), because otherwise all suggestions would be ignored. To be honest, architecture reviews and other forms of assessment work exactly that way.

One way to address problems very early in projects is to use an agile approach, especially to elaborate which risks exist and how to address them systematically. Contrary to common belief, such risks are not constrained to architecture and technology but also extend to politics, partner and vendor relationships, organization, quality, and development processes. Risk analysis is not just about recognizing risks but also about strategies and tactics for addressing them.

By the way, this is exactly what is currently happening in the economic crisis. Since market participants don't seem to be able to address the challenges themselves, politicians and central banks are forced to put countermeasures in place. All the risks in the market had been constantly ignored.

The later risks are detected, the more likely the project will enter panic mode. This is why I always recommend regular flash reviews of architecture, code, test strategy, and development process (for example, at the end of each iteration). They are a means for detecting problems very early and for getting rid of them (for example, by refactoring).

To cite Douglas Adams: DON'T PANIC!

Friday, November 07, 2008

Why the waterfall model does not work

Again and again I have met managers and software engineers who tried to convince me that agile processes, while appropriate for somebody else's project, were simply not applicable to their problem contexts. They came up with rather lame reasons, such as being used to waterfall models or the claim that agile processes only work for small teams. I especially enjoy people's reactions when I talk about really huge projects I have been involved in that followed the Scrum method.

From my viewpoint, the question shouldn't be whether to use agile development processes instead of a waterfall approach. I would rather suggest letting all the waterfall addicts prove why they consider a waterfall approach more suitable for a concrete project.

It is true that an agile method is not superior in planning projects. Even in an agile context you will need to estimate resources, budgets, or time frames in advance. But in contrast to a waterfall approach, you get early feedback if your initial planning proves to be plain wrong: if you have accumulated a substantial delay after the third iteration, you are able to react with appropriate countermeasures. In a waterfall model, the old saying goes that when 80% of the project has been completed, you still need to address the remaining 80%. You may be doomed to fail from the beginning of the project, but a waterfall model lets you live with this illusion until the sad end. An agile method, on the other hand, provides a whole range of safety nets. You may fall, but contact with the ground is usually much smoother.
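To illustrate the kind of early feedback I mean, here is a back-of-the-envelope sketch in Python. It assumes Scrum-style story points and velocity; the numbers (200 points, a planned velocity of 20 points per iteration, observed velocities of 12, 14, and 13) are purely hypothetical, and `projected_iterations` is an illustrative helper, not part of any tool.

```python
import math


def projected_iterations(total_points: float, velocities: list) -> int:
    """Estimate the total number of iterations, assuming the average
    velocity observed so far stays constant (a simplifying assumption)."""
    done = sum(velocities)
    average = done / len(velocities)
    remaining = total_points - done
    return len(velocities) + math.ceil(remaining / average)


planned = math.ceil(200 / 20)  # initial plan: 200 points at 20 points per iteration
projected = projected_iterations(200, [12, 14, 13])  # feedback after iteration 3
print(planned, projected)  # 10 16
```

After only three iterations, a projection of 16 iterations instead of the planned 10 is a strong signal to re-plan, cut scope, or take other countermeasures, long before the "remaining 80%" would show up in a waterfall project.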

Let me take it the other way round. What are the preconditions to make a waterfall model succeed?

- All requirements are available at project start and will remain unchanged.

- All of the requirements are consistent and complete.

- No errors will be introduced in any phase of software development.

- Likewise, all tools, legacy components, and infrastructure parts fully adhere to their specifications and, of course, are consistent and complete themselves.

- All stakeholders start with a full understanding of the problem domain. Developers, testers, architects also have a full understanding of the solution domain.

- There will be no misunderstandings in any communication between stakeholders.

Obviously, most of these preconditions never hold in any real-life project. We have to cope with error and failure. In agile approaches, the principle of piecemeal growth helps to master complexity. Safety nets such as test-driven development or reviews enable quick detection of potential problems. Means like refactoring help us address the problems we detect and embrace change. Agile communication and customer participation help keep all stakeholders synchronized.

Two of the main principles of agile engineering might be called "embrace change" and "learning from failure".

And if we look at nature, evolution basically also represents an agile approach. For all creationists: evolution does not necessarily rule out that there could be a master plan behind everything.

What's your take on the agile versus waterfall dispute?

Friday, October 24, 2008

Integration

Integration represents one of the most difficult issues when creating a software architecture. You need to integrate your software into an existing environment, and you typically also integrate external components into your software system. Last but not least, you often even integrate different home-built parts to form a consistent whole. Thus, integration basically means plugging two or more independently built pieces together, where the act of plugging requires some prior effort to make the pieces fit. The provided interface of component A must conform to the required interface of component B. If these components run remotely, interoperability and integration are close neighbors.
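A minimal sketch of what "conforming" can mean in practice is the classic Adapter pattern: a thin piece of glue that translates the provided interface of one component into the required interface of another. The Python below is illustrative only; all class and method names are hypothetical.

```python
from typing import Protocol


class TemperatureSource(Protocol):
    """Required interface: what component B expects (hypothetical)."""

    def celsius(self) -> float: ...


class LegacyThermometer:
    """Provided interface: what the independently built component A offers."""

    def read_fahrenheit(self) -> float:
        return 98.6


class ThermometerAdapter:
    """Glue that makes A's provided interface fit B's required interface."""

    def __init__(self, legacy: LegacyThermometer) -> None:
        self._legacy = legacy

    def celsius(self) -> float:
        # Adapts both the operation name and the data format (F -> C).
        return (self._legacy.read_fahrenheit() - 32.0) * 5.0 / 9.0


def report(source: TemperatureSource) -> str:
    """Component B: knows only the required interface, never component A."""
    return f"{source.celsius():.1f} C"


print(report(ThermometerAdapter(LegacyThermometer())))  # 37.0 C
```

Note that even this toy case involves two dimensions of integration at once: the interface (method names) and the data format (units).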

For complex or large systems, especially those distributed across different network nodes, integration becomes one of the core issues.

Integration needs regarding external components or environments should be treated as high-priority requirements, while internal integration is often caused by the top-down architecture creation process. Examples of external integration include using a specific UI control, running on a specific operating system, requiring a concrete database, or being required to use a specific SOA service. Internal integration comes into play when an architect partitions the software system into different subsystems that eventually need to be integrated with each other.

Unfortunately, integration is a multi-dimensional problem. It is necessary to integrate services, but also to integrate all the heterogeneous document and data formats. We need to integrate vertically, such as connecting the application layer with the enterprise layer and the enterprise layer with the EIS layer. Likewise, we need to integrate horizontally, such as connecting different application silos using middleware technologies such as SOAP. In addition, shallow integration is not always the best solution. What is shallow integration in this context? Suppose you have developed a graph structure such as a directory tree. Should you create one fat integration interface with complex navigation functionality, or rather provide many simple integration interfaces for all nodes in the tree?
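The two options for the directory tree can be contrasted in a small Python sketch. The names `Node`, `child`, and `TreeFacade` are hypothetical; the point is only where the navigation logic lives.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """A directory-tree node (hypothetical example structure)."""

    name: str
    children: list[Node] = field(default_factory=list)

    # Option 2: many simple interfaces. Each node exposes only its own
    # neighbourhood, and the client navigates one step at a time.
    def child(self, name: str) -> Node | None:
        return next((c for c in self.children if c.name == name), None)


class TreeFacade:
    """Option 1: one fat integration interface that owns all navigation."""

    def __init__(self, root: Node) -> None:
        self._root = root

    def find(self, path: str) -> Node | None:
        """Resolve a slash-separated path such as 'usr/lib' from the root."""
        node: Node | None = self._root
        for part in filter(None, path.split("/")):
            node = node.child(part) if node else None
        return node

    def list_dir(self, path: str) -> list[str]:
        node = self.find(path)
        return [c.name for c in node.children] if node else []


root = Node("", [Node("usr", [Node("lib"), Node("bin")]), Node("etc")])
print(TreeFacade(root).list_dir("usr"))  # ['lib', 'bin']
usr = root.child("usr")  # fine-grained: one call per hop
print(usr.child("bin").name if usr else None)  # bin
```

Neither option is right in general: the fat interface hides the tree structure and can answer a whole query in one (possibly remote) call, while the fine-grained variant keeps each interface trivial but exposes the structure and, across a network, costs one round-trip per hop.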

What about UI integration where you need to embed a control into a layout?

What about process integration where you integrate different workflows such as a clinical workflow that combines HIS functionality (Hospital Information System) with RIS functionality (Radiology Information System)? Or workflows that combine machines with humans?

What if you have built a management infrastructure for a large-scale system and now try to integrate an additional component that wasn't built with this management infrastructure in mind?

Think about data integration, where multiple components or applications need to access the same data, such as a common RDBMS. How should you structure the data to meet their requirements? And how should you combine data from different sources to glue the pieces into a complete transfer object?
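Combining data from different sources into a transfer object could look like the following minimal sketch. The two dictionaries stand in for real sources such as an RDBMS and a billing system, and the identifier mapping between them is an assumption; in real projects, exactly this kind of key and format mismatch is where much of the integration effort goes.

```python
from dataclasses import dataclass

# Hypothetical data sources with different keying schemes.
CRM_CUSTOMERS = {42: {"name": "Ada Lovelace", "email": "ada@example.org"}}
BILLING_BALANCES = {"C-0042": 125.50}


@dataclass(frozen=True)
class CustomerDTO:
    """Transfer object glued together from several data sources."""

    customer_id: int
    name: str
    email: str
    balance: float


def billing_key(customer_id: int) -> str:
    # Assumed mapping between the CRM's integer IDs and billing's string keys.
    return f"C-{customer_id:04d}"


def assemble_customer(customer_id: int) -> CustomerDTO:
    crm = CRM_CUSTOMERS[customer_id]
    # Missing billing data defaults to a zero balance (a design assumption).
    balance = BILLING_BALANCES.get(billing_key(customer_id), 0.0)
    return CustomerDTO(customer_id, crm["name"], crm["email"], balance)


print(assemble_customer(42))
```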

Semantic integration is another dimension where semantic information is used to drive the integration such as automatically generating adapter interfaces for matching the service consumer with the service provider.

And finally, what about all these NFRs (non-functional requirements)? What happens if you have built a totally secure system and now need to connect it with an external component?

Needless to say, integration is not as simple as it often appears in the beginning. Even worse, integration is often not addressed with the necessary intensity early in the project. Late integration might require refactoring and sometimes even complete reengineering if there are no built-in means for supporting "deferred" integration - think of plug-in architectures as a good example.

Architects should explicitly address integration issues from day 1. As already mentioned, I recommend placing integration issues as high-priority requirements into the backlog. Use cases and sequence diagrams help determine functional integration (the interfaces) as well as document/data transformation needs (the parameters within these interfaces). During later cycles of architecture development, operational issues can also be targeted with respect to the integrated parts. Last but not least, architects and developers need to decide which integration technologies (SOA, EAI, middleware, DBMS, ...) are appropriate for their problem context. Integration is an aspect that crosscuts your entire system!

Agile processes consider integration a first-class citizen. In a paradigm that promotes piecemeal growth, continuous integration becomes the natural metaphor. From my perspective, all software development projects with high integration effort will inevitably fail if they don't leverage an agile approach.

To integrate is agile!

Friday, October 03, 2008

Trends in Software Engineering

It has been a pretty long time since my last posting. Unfortunately, I was very busy this summer due to some projects I am involved in. In addition, I enjoyed some of my hobbies such as biking, running and digital photography. For my work-life balance it is essential to have some periods in the year where I am totally disconnected from IT stuff. But now it is time for some new adventures in software architecture.

Next week I am invited to give a talk on new trends in software engineering. While a few people think there is nothing more to discover and invent in software engineering, the truth is that we are still representatives of a somewhat immature discipline. Why else do so many software projects run into trouble? I won't compare our discipline with others such as building construction because I don't like comparing apples with oranges. For example, it is very unlikely that a few days before your new house is finished you would ask the engineer to move a room from the ground floor to the first floor. Unfortunately, such things actually happen in software engineering on a regular basis.

A few years ago I wrote an article on exactly this issue for a widespread German IT magazine. I built my thoughts around the story of a future IT expert traveling back in a time machine to our century. When he materializes, a large stack of magazines falls on his head - unintentionally - and he cannot remember anything but his IT knowledge. How would such a person evaluate the current state of affairs in software engineering? Do you think Mr. Spock from the starship Enterprise will use pair programming or AOP? Wouldn't look very cool in a SciFi series, would it?

To understand where software engineering is heading, it helps to understand that all revolutions are rooted in continuous evolution. At some point, existing technology can no longer scale with new requirements, which forces researchers to come up with new ideas. Also mind the competitive challenge: the first one to address new requirements may become the market leader. Learning from failure is an important aspect in this context. So, what is currently missing or failing in software engineering? Where do we need productivity boosts? Such new requirements might stem, for example, from new kinds of hardware or software. Take multi-core CPUs as an example. How can we efficiently leverage these CPUs with new paradigms for parallel programming?

Another example is the creation of software architecture. Contrary to many assumptions, most projects still develop their software architecture in an ad-hoc manner. We need well-educated and experienced software architects as well as systematic approaches to address this problem of ad-hoc development. The agility issue is especially challenging. Software engineers need to embrace change in order to survive. Architecture refactoring is a promising technology in this area, among many others, of course.

Another way of boosting productivity is to avoid unnecessary work. Here, product line engineering and model-based software development are safe bets. If the complexity of problem domains grows, we cannot handle the increased complexity with our old tool set. We need better abstractions such as DSLs. For the same reason, I simply cannot believe that general-purpose languages are the right solution in a world with an increasing number of problem domains. Wouldn't this be another instance of the hammer-nail syndrome? Believe me, dynamic and functional languages will have increasing impact.

In a world where connectivity is the rule, integration problems are inevitable. In this context, SOA immediately comes to my mind. By SOA I mean an architecture concept, not today's technology, which I still consider immature. New, better SOA concepts are likely to appear in the future. SOA will evolve just as CORBA and all other predecessors did. Forget all the current ESB crap which already promises to cure all the world's problems.

There is a lot more that will influence emerging software engineering concepts. Think of grids, clouds, virtualization, decentralized computing! Look at the CMU SEI report on Ultra-Large-Scale Systems that Linda Northrop was in charge of. There you'll find plenty of research topics we have to solve before ULS systems become reality.

For us software engineers the good news is that we are living in an exciting (although challenging) period of time.

Thursday, August 28, 2008

The Silver Bullet

Frederick P. Brooks brought up the concept of silver bullets. Of course, Frederick was not referring to some weird people who turn into computer geeks at full moon and can only be stopped using these nice little silver bullets. Instead, he claimed that no single software technology will, by itself, boost software development productivity by a factor of 10 or more within a decade. So far, he has been right. Object orientation? Really productivity-boosting, but not by an order of magnitude. Models? Nice try, but someone has to build the model and the generator.

But won't there be any technology in the future that can refute this thesis? Suppose an alien life form with incredible technology visits planet Earth. How would they design software systems? Do we really believe they would leave their giant spaceship with a notebook, start modeling their systems with some kind of UML tool, and program in Java?

Thus, is the absence of a silver bullet a universal law, or just a constraint that only humans face due to their limited capabilities?

Frederick P. Brooks also brought up the concept of accidental versus inherent (he called it essential) complexity. Basically, each problem has an inherent complexity. You can't specify a linear-time comparison-based algorithm for the general sorting problem. Forget it, there is really no way! But we ourselves cause a lot of problems through accidental complexity. Using a dense two-dimensional array to store a sparsely populated 1000x1000 matrix isn't a very smart approach, right?
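The sparse-matrix example can be made concrete with a minimal dictionary-of-keys sketch in Python: only non-zero entries are stored, so memory grows with the number of non-zeros rather than with rows times columns. The class name is hypothetical; for production work, a library such as SciPy's `scipy.sparse` module is the more likely choice.

```python
from __future__ import annotations


class SparseMatrix:
    """Dictionary-of-keys sparse matrix: stores only non-zero entries."""

    def __init__(self, rows: int, cols: int) -> None:
        self.rows, self.cols = rows, cols
        self._data: dict[tuple[int, int], float] = {}

    def _check(self, key: tuple[int, int]) -> None:
        r, c = key
        if not (0 <= r < self.rows and 0 <= c < self.cols):
            raise IndexError(key)

    def __setitem__(self, key: tuple[int, int], value: float) -> None:
        self._check(key)
        if value == 0:
            self._data.pop(key, None)  # keep the "only non-zeros" invariant
        else:
            self._data[key] = value

    def __getitem__(self, key: tuple[int, int]) -> float:
        self._check(key)
        return self._data.get(key, 0.0)  # absent entries are implicitly zero

    def nnz(self) -> int:
        """Number of stored (non-zero) entries."""
        return len(self._data)


m = SparseMatrix(1000, 1000)
m[0, 0] = 1.5
m[999, 3] = -2.0
print(m.nnz())      # 2 stored entries instead of 1,000,000 dense cells
print(m[500, 500])  # 0.0
```

The inherent complexity (a 1000x1000 matrix with a few values) is unchanged; only the accidental complexity of wasting storage on zeros disappears.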

No technology can get rid of inherent complexity, but it could hide this kind of complexity from the user. Hiding complexity is one way to increase productivity - just make it a SEP (Somebody Else's Problem). Helping to address accidental complexity is another. Consequently, the best productivity boost results from combining both.

According to philosopher Thomas Kuhn, the evolution of technologies is largely a linear process. A technology can only scale up to specific limits. When it reaches those limits, workarounds help postpone the need for a new approach. But at some point, a completely new and revolutionary approach will appear (such as the switch from structured programming to OOP), and the same kind of technology cycle restarts. It is a kind of spiral model, if you will.

The only points at which we could observe such productivity boosts are technology revolutions. However, new technology revolutions are mostly rooted in existing technologies. Thus, an immediate productivity gain of an order of magnitude is simply not possible.

But as a thought experiment: if you compared the way software development challenges are approached now and 40 years ago, you would recognize a tremendous increase in effectiveness. It is a long way from software engineering in the sixties to that of today. No person could have skipped all the intermediate results and challenges.

Thus, if an alien race were to land on Earth, it appears very likely that they could introduce us to new ways of building software systems that offer an order-of-magnitude productivity increase.

Conclusion: Silver bullets are theoretical beasts that only exist in the Star Trek universe. As pragmatic architects, we must leverage the tools and technologies existing today. And we shouldn't believe in all those technology panaceas that vendors and market analysts constantly promote, such as SOA, SaaS, you name it.

Tuesday, August 12, 2008

Now it is proven - NESSI exists!

OK, but what is it?

According to its web site, "NESSI is the European Technology Platform dedicated to Software and Services. Its name stands for the Networked European Software and Services Initiative."

Admittedly, this definition is not very clear. So, let me try my own explanation.

Suppose you'd like to leverage SOA to connect heterogeneous islands. Maybe you are going to build a healthcare system that connects different international regions, allowing physicians to access your healthcare data if required and permitted. An underlying SOA infrastructure should provide:

- SOA based middleware and process modeling

- Enterprise Application Integration facilities

- a kind of internet bus that supports various protocol families

- registries and repositories

- horizontal services such as security

Sure, you can obtain such ingredients if you accept vendor lock-in. I won't list all the big SOA proponents here as they are quite obvious. The truth is that it is almost impossible to cope with such heterogeneity. If you try, you will soon be overwhelmed by sheer complexity.

Unfortunately, there is another dimension to this problem:

- Even if you have all these facilities at hand, you need to standardize the vertical domains. What if the whole SOI (Service Oriented Infrastructure) is standardized but two banks don't agree on what a customer or a bank account is?

So far, solutions have either attempted to address the technology issue or to address the domain standardization.

NESSI tries to address both challenges.

What is required is a kind of standardized SOA operating system as well as domain-specific standards for building applications on top of this platform. From my personal viewpoint, the combination of Open Source Software with standards would be a perfect fit. It is necessary to create both standardized APIs and a reference implementation able to handle mission-critical applications.

Is a platform sufficient? Absolutely not, because we also need to cope with service engineering and governance issues, non-functional requirements, and tools. Consequently, NESSI is also trying to address these points.

All of the aforementioned problems are hard to solve, but on the other hand we have to solve them anyway, because they are rooted in inherent complexity. If we need to solve them to finally live the dream of "the network is the computer", then NESSI represents an excellent opportunity.

But that's my personal viewpoint. What is yours?

Michael's Event Calendar

- In September I will visit my colleagues in Bangalore and give a seminar on Distributed System Architecture.

- On 14th October I will give a keynote at COMPARCH 2008 in Karlsruhe. I was invited by Ralf Reussner and Clemens Szyperski, two well-known gurus on architecture and components. Guess what topic I will cover? Yes, architecture refactoring, my preferred topic these days.

- Originally, I planned to attend this year's OOPSLA conference in Nashville. I have two accepted tutorials, one on high-quality software architecture, the other one on Software Architecture Refactoring. Unfortunately, I am so busy within Siemens that I won't make it to OOPSLA. However, an excellent member of my staff will give the two tutorials instead. Marwan is not an emergency solution but an excellent architect himself, formerly working for Lufthansa Systems. You will have a lot of fun attending our two tutorials. Mentally, I will be there, of course :-)

- In November I will most likely be invited to Microsoft TechEd in Barcelona to give two talks.

- In December I will be on a panel at the Service Wave conference of the European NESSI initiative.

- In January 2009 there is my local event, the OOP 2009 in Munich. I don't yet know what topics I will address, but be sure I will be around :-)

- I am planning to submit for next year's ACCU in Oxford (April).

Mojave - Episode II

Did you hear about Microsoft's Mojave Project, where they told a bunch of selected people - most of them Vista critics - about a cool new operating system called Mojave? Reportedly, most of them were quite enthusiastic about Mojave. To their big surprise, Mojave turned out to be Vista - the devil in disguise, if you will, in remembrance of an old Elvis hit.

What can architects learn from this?

- First of all, we can hide all the mess of our systems behind a nice look and feel. The best software system will not be accepted if its usability sucks, and vice-versa.

- Secondly, if we have an excellent system that people for some strange reason don't appreciate, we could try to put a new API or look and feel on top. Maybe the interface is the only thing that sucks in our system.

- Last but not least, we could also learn that nice architecture and design diagrams might impress others so much that they consider the system a neat piece of design, while in fact the implementation is totally different from the design.

Regarding the original Mojave experiment, I really doubt that the reviewers had the right level of expertise and mindset. Don't judge a software system by its cover! Review and evaluate the design and the implementation thoroughly.

Architects shouldn't just believe but rather evaluate and enforce.

But of course, in some stakeholder presentations, such as presenting the system to your top management, you can add a marketing layer while remaining politically correct and honest.

Monday, August 11, 2008

Art, Craft or Science?

Is software design an art, a craft or a science? This question has been the starting point for many entertaining discussions. And many different opinions and answers exist.

So what is my take on this?

Building a software system requires engineers to come up with a mapping that takes the problem and maps it to a concrete solution. Requirements describe the problem and the desired properties of the solution. Unfortunately, the mapping typically is not unique, since there might be many solutions to the same problem. Hence, architects and developers face a lot of freedom. The bad thing about freedom is that it always forces architects to make decisions. In other words, to architect is to decide.

Of course, we would appreciate it if we could immediately find an appropriate solution for all problems, just following a divide-and-conquer strategy of software development. But this never happens in practice.

Two problems come to my mind in this context:

- Sometimes we encounter problems for which we neither know a solution nor can find a solution elsewhere (finding a solution means either knowing a direct solution or being able to map the actual problem to another problem for which you already know a solution - for example, if we are able to map our algorithmic problem to a sorting problem.)

- In contrast to mathematics, the problem itself is a moving target in software engineering. Panta rhei.
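The idea of mapping a problem onto one with a known solution, mentioned in the first point above, can be illustrated with the classic element-uniqueness problem: does a list contain duplicates? Reducing it to sorting makes the answer almost trivial, because after sorting, equal elements must be adjacent. A minimal Python sketch (the function name is hypothetical):

```python
def has_duplicates(items: list) -> bool:
    """Element uniqueness, solved by reducing it to sorting.

    After sorting, equal elements are neighbours, so one linear scan over
    adjacent pairs suffices; the sort dominates at O(n log n).
    """
    ordered = sorted(items)
    return any(a == b for a, b in zip(ordered, ordered[1:]))


print(has_duplicates([3, 1, 4, 1, 5]))  # True
print(has_duplicates([2, 7, 1, 8]))     # False
```

Hashing would solve this particular problem as well; the point here is the reduction itself: once the problem is recognized as "sorting plus a trivial scan", no new solution has to be invented.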

The first issue implies that you may not find a pattern or some other kind of best practice for your concrete problem context. Instead, you need some intuition. Hopefully, you recognize that the problem at least resembles another kind of problem for which you already have a vague solution idea, so that this can serve as a good starting point. By the way, this is the reason why it turns out to be so hard to build a general problem solver for software engineering. Freedom is very hard for a general problem solver to deal with.

The second issue, coping with change, requires some sort of agility. In contrast to logic, our axioms keep changing while we are applying the calculus. The only way to deal with change is to embrace change everywhere, in the process, in the tool chain, in designing, in testing, in refactoring, in programming, in communicating.

What makes software design so hard sometimes is when both problems appear in parallel.

When people ask whether software engineering is a craft, a science, or an art, the answer should be obvious now. It is a science as we try hard to follow formal approaches where feasible. It is a craft because solving problems often requires experience and best practices. And it is an art as software engineering leaves enough freedom for intuitive and even artistic solutions.

I guess this is what makes our job so interesting.

Friday, August 08, 2008

Architect always implements

When I once invited Bertrand Meyer to our location in Munich, he gave an excellent talk on Software Engineering. His most memorable messages were "Bubbles don't crash" and "All you need is code".

With the first message, "Bubbles don't crash", Bertrand referred to all those engineers who have turned into PowerPoint or UML freaks. A PowerPoint slide or UML diagram will never crash (unless you use it as a model for code generation, the MDSD guys might argue). It is surprisingly easy to impress people with simple boxes and lines (sometimes dubbed software architecture). It is especially easy to impress top management, which is why the wrong consultants can potentially cause so much damage. Some might respond that high abstraction levels are the only way to understand and build complex systems. That's certainly true. On the other hand, you would not be able to build a ship if you had no idea about water. The same holds for software systems. It is simply not possible to develop a software system without any idea of the runtime environment on which it is supposed to execute.

Even if you had in-depth knowledge of your software system's runtime environment and built an excellent software architecture, it is NOT a no-brainer to transform a software architecture into a working implementation. That's why Bertrand introduced the "All you need is code" attitude. What good is the best theory if you can't turn it into practice? As an architect you need implementation skills, either for implementing parts of the system yourself or for assessing the results of other team members. How could you ever build a good bicycle without riding one yourself?

The question is how we as software architects can meet these requirements. "Architect always implements" means that you should also implement in a project, but definitely not on the critical path. By implementing, you will earn the respect of developers while keeping in touch with the implementation at the same time. And you will have to eat your own dog food. Whenever something is not as expected, you can detect the problem and help solve it. However, in some projects architects are so busy with communication, design, and organization efforts that spending time on implementation seems almost impossible. Never say impossible in this context, do not even think it! Even in such scenarios I recommend spending some of your time on code reviews and some of your spare time getting familiar with the software technologies used in your project context. Otherwise you will create Software Frankensteins: creatures you are not proud of and that tend to live forever unless eliminated at an early stage.

Architects are not just masters of Microsoft Office and a bunch of drawing tools who create design pearls and throw them over the fence to the developers, who ought to build a perfect implementation, while the architects enjoy their holidays on a nice Caribbean island.

Be pragmatic! I guess the Pragmatic Programmers book series was created for exactly this reason: to make us aware of the relevance of practical experience.

Never ever forget: ARCHITECTS ALWAYS IMPLEMENT. There is no exception to this rule.

Saturday, July 26, 2008

Pseudo Effectiveness

Do you know these co-workers who are always answering e-mail in the middle of the night, who are in their offices until midnight, who have so many contacts to customers, who have an infinite list of activities, who have their mobile phone directly integrated into their body, and who always tell you about the big work overload they have? All those unknown heroes who always sacrifice their spare time on the altar of the god of infinite work?

Of course, many of us in the IT world are busier than is healthy or effective. But occasionally people only claim to be active and involved while in fact they are not. Unfortunately, it is not easy to differentiate between actual effectiveness and that kind of pseudo effectiveness. Sometimes the one who does the job does not get promoted, while the one presenting beautiful slides about the work results of others does. Of course, you may also refer to the Peter principle in this context :-)

Why do I discuss pseudo effectiveness in this architecture blog? As an architect, you might feel a significant impact if people with high pseudo effectiveness are involved in your projects. Think about outsourcing sites where you have the feeling that productivity is very low. Think about developers or architects who have the same skill level, but whose work results are completely different in terms of quantity and quality. Also think about promotions where you had the gut feeling of being ignored, even though you did an excellent job, in contrast to the other guy.

But be aware that all of us are somehow guilty of pseudo effectiveness. A little chat with co-workers in the cafeteria, some daydreaming in front of the workstation, some Internet surfing: all this may add up to a significant amount of time during the day. But as you might remember from my previous posts, breaks are important. I claim that productivity drops significantly when we ignore this kind of work-life balance.

How can you detect real pseudo effectiveness? The only way is by analyzing actual productivity. Which tasks has the person been responsible for, and what are the actual outcomes? In this context, goals should be defined in such a way that there is no room for pseudo effectiveness. Someone who needs a week to write a simple letter may seem very active, while in fact being very ineffective and inefficient.

Friday, July 25, 2008

Design Pearls

What holds true for building construction is also applicable to software development. But software engineers don't consider themselves just craftsmen, but also artists. We strive for architectural beauty and aesthetic design and are not satisfied with merely functional aspects.

While architectural beauty is an important achievement that improves qualities such as expressiveness, simplicity, or symmetry, design pearls represent the other extreme.

By design pearls I am referring to masterpieces of software design which don't stop at meeting the requirements, but also add unnecessary burden (or ornaments, if you will) to the system.

While design pearls show the mastery of a single individual, they are counterproductive for team development, as they decrease simplicity (there are more artifacts than necessary for the given task) and symmetry (they don't follow the KISS principle but constantly try to reinvent the wheel).

We can avoid design pearls by strictly following a requirements-driven approach. All architecture concepts in a system must be rooted in a concrete requirement. In addition, we should use patterns where useful. A pattern is a "complete disregard of originality": it simply helps us reuse common wisdom instead of coming up with new solutions. And we should strive for architecture quality indicators such as simplicity, expressiveness, orthogonality, and symmetry. One way to aim for symmetry is to introduce design guidelines from day 1. Instead of letting developers figure out how to cope with cross-cutting concerns such as exception handling, it is better to give them a framework of rules and guidelines.
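To make the last point concrete, here is a minimal Python sketch of what such a guideline might look like for exception handling. It assumes a hypothetical project-wide rule: every public operation logs unexpected failures exactly once and re-raises them as a single error type. The names `ServiceError`, `handle_errors`, and `parse_quantity` are invented for illustration.

```python
import functools
import logging

logger = logging.getLogger("orders")


class ServiceError(Exception):
    """The single error type that may leave any component (hypothetical)."""


def handle_errors(operation):
    """Guideline as code: log unexpected failures once, then re-raise them as
    ServiceError, keeping the original exception as the cause."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            try:
                return func(*args, **kwargs)
            except ServiceError:
                raise  # already translated further down; don't log twice
            except Exception as exc:
                logger.error("%s failed: %s", operation, exc)
                raise ServiceError(f"{operation} failed") from exc
        return wrapper
    return decorator


@handle_errors("parse quantity")
def parse_quantity(text):
    return int(text)


print(parse_quantity("3"))  # 3
try:
    parse_quantity("three")
except ServiceError as err:
    print(err, "| caused by", type(err.__cause__).__name__)
    # parse quantity failed | caused by ValueError
```

Because the rule lives in one place, every developer handles errors the same way, which is exactly the kind of symmetry such a guideline is meant to buy.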

In summary: design pearls are really nice to look at but ugly to change and extend.

Sunday, July 20, 2008

An Anecdote on Architecture Training

Recently, I gave an architecture training within Siemens. Another Siemens seminar on project teams took place in the same hotel. In one of the breaks, a participant asked me whether I knew more details about this architecture seminar. He could not believe that someone within Siemens was teaching building architecture. I explained to him that the seminar actually covered software architecture, not building construction. He asked me whether I could recommend the seminar. "Yes, absolutely", I said, "to be honest, I am the speaker". What can we learn from this: be cautious when you tell someone you are an architect.

Wednesday, July 16, 2008

Stop those Control Freaks

Suppose you are in a meeting with other people. You need more information on the Hubble constant and ask the audience for details. Someone (maybe a friendly physics professor) comes to the whiteboard and explains the theory.

Hmmh, how does that relate to software architecture? If you think about the situation, you'll recognize that the solution is based on a decentralized approach.

Normally, we are more used to centralization. Maybe, we are even addicted to all those centralized concepts. There is a central directory server, a central hub in the EAI solution, a central database, ... But as the example illustrates, sometimes decentralization is the better choice.

In the real-life example we used a P2P concept by leveraging broadcast communication - ok, you just spoke very loudly so that everyone paid attention to your question. Is this the only way to implement decentralization? No, there are several other alternatives.

For example, Swarm Intelligence: suppose I can't remember where I parked my car. To speed up the search, all family members visit different areas to locate the car. All searchers carry mobile phones to communicate with each other. As soon as my wife finds the car, she tells everyone where it is.

Another example could be Ad Hoc Networking: in the nearby forest, I find an interesting bird. I tell other people approaching my location about the bird, and they in turn tell other people, who ... After a short period of time the scene is crowded with interested people.
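The ad hoc networking example boils down to flooding: every informed node passes the news on to its direct neighbours, and no node needs a global view. Here is a minimal Python sketch, assuming everyone passes the news on in synchronous rounds and that the acquaintance graph is given as a hypothetical dictionary; `rounds_to_inform` computes the round in which each person first hears about the bird.

```python
from collections import deque


def rounds_to_inform(neighbors, origin):
    """Simulate flooding: each node forwards the news to its own neighbours.
    Returns a dict mapping each reached node to the round it first heard it."""
    heard_in = {origin: 0}
    queue = deque([origin])
    while queue:
        node = queue.popleft()
        for peer in neighbors.get(node, []):
            if peer not in heard_in:  # only the first message counts
                heard_in[peer] = heard_in[node] + 1
                queue.append(peer)
    return heard_in


# Hypothetical acquaintance graph: each person only knows who is nearby.
forest = {
    "me": ["anna", "ben"],
    "anna": ["carl"],
    "ben": ["carl", "dora"],
    "dora": ["emil"],
}

print(rounds_to_inform(forest, "me"))
# {'me': 0, 'anna': 1, 'ben': 1, 'carl': 2, 'dora': 2, 'emil': 3}
```

Note the trade-off mentioned below: carl hears the news twice, so fault tolerance comes at the price of redundant messages.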

Decentralized thinking means getting rid of central nodes or central controls. This might increase fault tolerance, but decrease efficiency. Often centralized concepts are mixed with decentralized ones. For example, there might be registries where all possible peers in a P2P network are known.

From an architecture viewpoint, several patterns support decentralization. For instance, the Lookup/Locator pattern, Blackboard architecture pattern, or the Leader/Followers pattern.
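Since registries are the typical centralized element inside decentralized systems, here is a minimal Python sketch in the spirit of the Lookup pattern. It is a sketch only: `LookupService` and `PrinterPeer` are hypothetical names, and a real lookup service would itself be distributed and would have to cope with peers that silently disappear.

```python
class LookupService:
    """Hypothetical lookup registry: peers announce themselves under a service
    name; clients query it once and then talk to the peers directly."""

    def __init__(self):
        self._registry = {}

    def register(self, service, peer):
        self._registry.setdefault(service, []).append(peer)

    def unregister(self, service, peer):
        peers = self._registry.get(service, [])
        if peer in peers:
            peers.remove(peer)

    def lookup(self, service):
        # Return a copy so callers cannot corrupt the registry.
        return list(self._registry.get(service, []))


class PrinterPeer:
    def __init__(self, name):
        self.name = name

    def print_document(self, doc):
        return f"{self.name} printed {doc}"


lookup = LookupService()
lookup.register("printer", PrinterPeer("floor-1"))
lookup.register("printer", PrinterPeer("floor-2"))

# The client uses the registry only to find a peer; the actual call is peer-to-peer.
peer = lookup.lookup("printer")[0]
print(peer.print_document("report.pdf"))  # floor-1 printed report.pdf
```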

One of the qualities we can get from a decentralized system is emergence: the system as a whole reveals smart behavior that is more than just the combination of its nodes. Think of swarm intelligence as in an ant colony.

From my viewpoint, decentralization is still neglected in software engineering, especially in software architecture. And as the examples show, real life offers an abundance of decentralized concepts.

Monday, July 14, 2008

Architecture Design - How deep should it go?

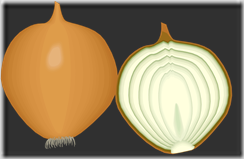

In my last posting I introduced the Onion model for architecture design. Often it is very difficult to determine when architecture design stops and fine-grained design begins. Thus, I am going to illustrate some of my thoughts.

Before you start, the most important preconditions for architecture design are the availability of

- the business goal of the software architecture

- a subset of all requirements (15-30%), but make sure the most important ones are already available

- a coarse-grained test strategy.

There are different recommendations for architecture design. First of all, I consider it essential to differentiate between strategic design and tactical design:

- Strategic design is about all those requirements that have a systemic impact on the whole software architecture. Basically, these are the inner layers of the onion. All architecture decisions whose impact is not merely local to a single component together make up strategic design.

- Tactical design is simply about the rest: all decisions that have only local effects. Interestingly, variability issues often denote tactical design issues, even in product lines. Things like the exchangeability of an algorithm only imply that we need to open our strategic architecture to support variation of the particular family of algorithms (a.k.a. strategies).
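A minimal Python sketch of such an opening for variation, assuming a hypothetical `ReportGenerator` whose strategic structure stays fixed while the ordering algorithm is passed in as a strategy:

```python
from typing import Callable, List, Sequence

# The variation point: any function from a sequence of numbers to a sorted list.
OrderingStrategy = Callable[[Sequence[int]], List[int]]


def ascending(values: Sequence[int]) -> List[int]:
    return sorted(values)


def descending(values: Sequence[int]) -> List[int]:
    return sorted(values, reverse=True)


class ReportGenerator:
    """Strategic part: the rendering structure is fixed; only the
    ordering algorithm (the strategy) is exchangeable."""

    def __init__(self, order: OrderingStrategy = ascending):
        self.order = order

    def render(self, values: Sequence[int]) -> str:
        return ", ".join(str(v) for v in self.order(values))


print(ReportGenerator().render([3, 1, 2]))            # 1, 2, 3
print(ReportGenerator(descending).render([3, 1, 2]))  # 3, 2, 1
```

Adding another ordering is then a local, tactical decision: write another function, and nothing in `ReportGenerator` changes.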

This definition comes close to what Grady Booch once meant by "software architecture is about the important things".

Another consideration consists of reducing the number of abstractions. This is what I call the Pyramid model:

- the top of the pyramid is the system itself,

- the middle layer represents all subsystems,

- the bottom layer comprises all components the subsystems consist of

Architecture design should not go deeper than the pyramid model. All abstractions underneath the bottom layer of the pyramid are part of fine-grained design. Note that it is up to your own definition how the system, subsystems, or components of the pyramid model map to the concrete abstractions in your domain. This might look different in an embedded system than in an Enterprise SOA system, where subsystems might become services.

All architecture decisions should be completely rooted in requirements and risks. All extra add-ons increase complexity and thus decrease simplicity and expressiveness.

Don't fall into the trap of believing that architecture is only about the problem domain and not about the solution domain, although you should always strive to keep your architecture as independent of specific technical issues as possible (always try to defer technology decisions to a later phase). "Hard" requirements such as "cycle time must not exceed 1 msec" or "use of SQL Server 2003 is mandatory" must be treated as first-class requirements. And those requirements may also have systemic impact, thus contributing to strategic design.

In the documentation of your architecture, follow a newspaper style. This implies that each artifact (system, subsystem, component) should be explained in a newspaper-like article. Architecture design is just that and no more.

In addition to architecture design you should:

- specify design and architecture guidelines for all crosscutting concerns. This is best done before architecture design for all important systemic requirements. For other concerns you might create guidelines in parallel to architecture design.

- create technology prototypes for all important risks. For example, if you need to guarantee 2000 transactions per second, make sure your middleware of choice really supports this requirement.

- refine the test strategy you should have established after requirements engineering.

These rules of thumb are intentionally somewhat vague. Again, I'd like to refer to Grady Booch in this context: "there is no cookbook for software architecture".

Fortunately, there are some good practices to share.

Thursday, July 03, 2008

The Onion Model

If you want to sow an onion, you need some preparation. It is important to take care of the right environment and also to think about the requirements an onion needs to grow and what risks you might encounter. From a business perspective, you should also consider what type of onion you like to harvest, but that's a different story. The inner core of the onion will be the domain model including all core components, their relationships and interactions.

For the next layers you will need a prioritized list of strategic requirements. These requirements influence systemic architecture decisions, for example reliability, availability, or security. All of them represent operational requirements that result in specific infrastructures. If performance is the most important operational requirement, you will build a performance layer around the domain logic core. In other words, the domain logic will be embedded into the performance infrastructure. Then you turn to the second most important operational requirement. If security is the number two requirement, your existing onion, which consists of the functional core embedded in a performance infrastructure, will get wrapped by a security layer. This continues until your architecture is stable.
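A minimal Python sketch of this layering, assuming performance is the top-priority requirement and security the second one. Each layer wraps the one below it and offers the same interface, in the style of the Decorator pattern. `OrderCore`, `PerformanceLayer`, and `SecurityLayer` are hypothetical names, and a cache plus a user check merely stand in for real performance and security infrastructure.

```python
class OrderCore:
    """Innermost layer: hypothetical domain logic that knows nothing
    about caching or security."""
    PRICES = {"onion": 2, "leek": 3}

    def price(self, user, item):
        return self.PRICES[item]


class PerformanceLayer:
    """First wrapper (top-priority requirement): caches results of the core."""

    def __init__(self, inner):
        self.inner = inner
        self.cache = {}
        self.core_calls = 0

    def price(self, user, item):
        if item not in self.cache:
            self.core_calls += 1
            self.cache[item] = self.inner.price(user, item)
        return self.cache[item]


class SecurityLayer:
    """Second wrapper (second-priority requirement): rejects unknown users
    before any inner layer is reached."""

    def __init__(self, inner, allowed_users):
        self.inner = inner
        self.allowed_users = set(allowed_users)

    def price(self, user, item):
        if user not in self.allowed_users:
            raise PermissionError(f"{user} may not query prices")
        return self.inner.price(user, item)


# Build the onion from the inside out, in priority order.
performance = PerformanceLayer(OrderCore())
onion = SecurityLayer(performance, allowed_users=["alice"])

print(onion.price("alice", "onion"))  # 2 (computed by the core)
print(onion.price("alice", "onion"))  # 2 (served from the cache)
print(performance.core_calls)         # 1
```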

At the end you need to address tactical issues such as flexibility or maintainability. These will become the outer layers of the onion. For some of them, you will need to open up your strategic architecture, for instance by providing hooks.

Eventually, you'll get an "onion" which was designed with a functional core in mind. The important requirements had a higher impact on the onion than the less important ones, which was ensured by prioritizing the onion layers.

Monday, June 23, 2008

Focus matters

To prevent such problems there are different means. One of the most important ones is requirements traceability. Each architecture change must address a requirement and/or a business goal. You should also clearly trace each architecture decision back to its requirement or business goal in your documentation. Architecture review methods such as ATAM use this information to figure out tradeoff points, sensitivity points, risks and chances of your architecture.
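Simple traceability bookkeeping can be automated. The following Python sketch assumes a hypothetical in-memory model in which each decision lists the ids of the requirements or business goals it addresses. It reports decisions without a traceable rationale (design pearl candidates) and requirements that no decision covers yet. This is not how ATAM itself works, only an illustration of the bookkeeping it relies on.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Decision:
    name: str
    traces_to: List[str] = field(default_factory=list)  # requirement/goal ids


def untraced_decisions(decisions: List[Decision], requirements: Dict[str, str]) -> List[str]:
    """Decisions that cite no known requirement or business goal."""
    return [d.name for d in decisions
            if not any(ref in requirements for ref in d.traces_to)]


def requirements_without_decision(decisions: List[Decision],
                                  requirements: Dict[str, str]) -> List[str]:
    """Requirements no decision refers to yet; worth a second look in a review."""
    covered = {ref for d in decisions for ref in d.traces_to}
    return sorted(set(requirements) - covered)


requirements = {
    "R1": "2000 transactions per second",
    "R2": "all changes are audited",
}
decisions = [
    Decision("asynchronous message queue", ["R1"]),
    Decision("home-grown plugin framework"),  # no rationale recorded
]

print(untraced_decisions(decisions, requirements))             # ['home-grown plugin framework']
print(requirements_without_decision(decisions, requirements))  # ['R2']
```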

In the optimal case there will be patterns available to support your architecture design. For orthogonality you should apply the same patterns for the same problem contexts. Likewise, relevant cross-cutting concerns such as error management should be explicitly addressed by architects and enforced through guidelines.

Note, however, that architects may come up with the best architecture or the most consistent and complete guidelines, but will fail if the implemented architecture differs from the envisioned architecture. Thus, architects should walk around and communicate to enforce the architecture vision.

Just staying in the office, throwing the architecture over the fence, and hoping everything will be in place after a while is the best recipe for project failure.

As change is the rule and not the exception, architects must always ensure that any change in the set of business goals or requirements leads to an appropriate architecture refactoring. In other words: if you need to stay in focus, you have to change whenever the focus changes.

Friday, May 30, 2008

There is no business like office business

In the last weeks I enjoyed small talk with several software engineers from various companies. Topics included technology and architecture, but also office work, management, colleagues and career paths. Several times I heard about companies where a couple of characteristic metrics for measuring engineer productivity exist. Let me exaggerate a little bit:

- One of the widespread metrics is office availability. I am speaking about engineers sitting in their offices or cubicles from early in the morning until late in the evening. This is something I fully understand for projects in critical phases or projects that are delayed. Of course, I also did it and will do it in the future. But I also heard about engineers staying in their offices very late to send an implicit message to their management, something like "Look, I am working so hard for the company. If you don't promote me, you should suffer from remorse." However, the truth according to several studies is that you cannot be productive for 8 hours or more per day, at least not over a longer time span. Even worse, if you stay in the office longer, your bug rate will increase significantly and the results will not be better than if you had stayed in the office for less time. I know the stories about employees in Silicon Valley or Redmond sleeping next to their office PC. There is a reason why eXtreme Programming recommends limiting work time to 40 hours per week. And to all of you believing in 16-hour work days, no matter whether you are good (engineer) or evil (manager): productivity in such overload situations is not possible. You either have many simple tasks or spend significant time twiddling your thumbs. Today, I read an article stating that an increasing number of IT workers suffer from the burn-out syndrome or other diseases caused by work overload.

- Another widespread metric is internal visibility. Do you remember Bertrand Meyer's "bubbles don't crash"? Management tends to promote engineers who spend significant time presenting to and chatting with management. If you don't provide a steady stream of good news (even if you invented this stream of good news) to the right persons, they won't even notice your existence in the worst case. Often, it is not the real results that count but management visibility, which is measured by ... guess what ... PowerPoint presentations, Word documents and Excel sheets. Don't forget that e-mails and meetings represent additional important means in this context. Considered from this perspective, virtual reality has already conquered the world.

There are a lot of lessons we can learn from these statements.

- First of all, Work/Life balance may be a buzzword, but a useful one if you ask me. For example, if I recognize that my productivity is dropping to almost zero, I leave the office, go running or biking, and then continue with my work, often until late in the evening. Such extended breaks provide me with a significant productivity boost. It might appear unbelievable, but I am definitely more productive that way than if I had spent the break time on office work.

- If I need to concentrate, I also work at home from time to time rather than spending the time in the office. With my phone constantly ringing, colleagues dropping by, and other continuous interruptions, offices don't really provide an adequate environment for creativity and brain workers. Ok, I start missing my colleagues and the social interactions after a short time. Thus, home office is the exception. Fortunately, there are so many opportunities (phone conferences, meetings) to interact :-)

- Effectiveness and efficiency are (or should be) the only things that count. It does not matter when or where you created your results. However, you need to make sure managers are aware of your work and what you achieve. Marketing yourself is an important issue in a universe where managers can't afford to track all work results of all engineers. If you coach some colleagues as a mentor and promote their visibility and career path, inform your managers. Improving the skills of co-workers and other more indirect results offer high business value to a company.

Interestingly, all these points are also valid for architects. However, home office days are often a bad idea in those hot project phases when creating or enforcing software architecture. For an architect, communication represents the most important instrument, and communication works best in face-to-face meetings. Nonetheless, even in these situations you need to plan your recreational breaks to maintain your Work/Life Balance.

Monday, May 12, 2008

Developer Usability

In the last months I've seen a lot of frameworks, platforms and libraries which revealed a substantial lack of usability. Developers reported to me that using those APIs was more a nightmare than a pleasure. Some of them just stopped using these artifacts, others built their own workarounds on top. When I asked the API developers for their comments, it turned out that most of those APIs had been built without any consideration of usability. In addition, adequate documentation was very often missing, which of course is also related to usability. Interestingly, I often hear the same complaints from end users. The UIs of most applications or devices are a pain. For instance, I am using an SAP-based system for ordering work items. What I need to fill in in all those fields is by no means self-explanatory. Help is missing or inappropriate. Error messages don't give any guidance on what could be wrong. Suppose shops like Amazon came up with such UIs. How successful do you think they would be? Whenever we are building such systems we need two things:

- a clear understanding of the domain

- a tight interaction with users to ensure usability

For example, if you read the book by Krzysztof Cwalina and Brad Abrams on how to design frameworks, you will find the recommendation of scenario-based design. Find out how end users could best use the system you are going to build in terms of user scenarios. Implement the API to support the most important and common scenarios. After the first prototype/iteration of the system is available, ask the target audience to use it and give feedback on what they like or don't like. Then improve it!

Contrary to common belief, architecture is not only about functional requirements and operational qualities. It is also about usability. A system that meets all requirements but is not usable is of no value to its users. As an example: I owned Rio and Creative MP3 players which provided an incredible amount of features, but lacked usability. After a while I switched to Apple iPods, which have fewer features and are significantly more expensive, but offer a great user experience.

In the end, you should strive to make the end user's life a pleasure. In this context, just remember that you yourself use many more systems than you develop. Something similar is written in many public restrooms in Germany: Leave the room the way you would like to find it.

Friday, March 14, 2008

On vacation

Monday, February 25, 2008

DSLs revisited

As a lazy person, I will not bore you with my own definition but just cite what Markus Voelter once said. In a former posting Markus defined a DSL as follows:

A DSL is a concise, precise and processable description of a viewpoint, concern or aspect of a system, given in a notation that suits the people who specify that particular viewpoint, concern or aspect.

Given that definition, UML is a vehicle to define DSLs. Take use case diagrams as an example. However, UML might better suit software engineers.

An ADL (Architecture Description Language) like Wright is a formal language to describe architectural designs.

BPEL or ARIS are DSLs to describe business processes. While BPEL is much more system centric, ARIS can also be understood by business analysts.

Thought questions:

- Is a programming language such as Java also a DSL?

- And what about natural language?

DSLs are useful to describe a viewpoint, concern or aspect of a system. But what means do we have to introduce our own DSLs?

- Meta data annotations (Java) or attributes (.NET) can serve as the core elements of a DSL. Take the different attributes WCF (Windows Communication Foundation) introduces to help describe contracts.

- UML provides a meta model for introducing DSLs as well as specific DSLs (the different diagrams to specify viewpoints).

- XML can serve as a foundation for introducing DSLs. Example: BPEL, WS-*, XAML.

- Programming languages like Ruby or Smalltalk let you intercept the processing of program statements, thus enabling DSLs. Example: Ruby on Rails.

- Integrated DSLs like LINQ are embedded into their surrounding language.

- APIs define a kind of language.

- The hard way might be designing your own language using plain-vanilla tools such as ANTLR. Note: It is not only the compiler or interpreter but also the run-time system we have to cope with.
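The first option in the list, annotations, can be imitated in Python with decorators. This is a loose analogy to what attributes do for WCF contracts, not WCF's actual API: `service_contract` and `operation` are hypothetical decorators that mark methods and let a processing step collect the resulting contract.

```python
def operation(func):
    """Marks a method as part of the service contract (hypothetical annotation)."""
    func.is_operation = True
    return func


def service_contract(cls):
    """Processes the annotations: collects all marked methods into a contract."""
    cls.contract = sorted(
        name for name, member in vars(cls).items()
        if getattr(member, "is_operation", False)
    )
    return cls


@service_contract
class Calculator:
    @operation
    def add(self, a, b):
        return a + b

    @operation
    def subtract(self, a, b):
        return a - b

    def _audit(self):  # not annotated, so not part of the contract
        pass


print(Calculator.contract)  # ['add', 'subtract']
```

The annotations carry the "language"; the processing step (here the class decorator, in .NET a runtime or tool reading the attributes) gives them meaning.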

According to the Pragmatic Programmers, designing a DSL can be very effective.

- It may help you visualize and communicate the viewpoint, aspect, or concern the DSL addresses.

- It may enable you to provide a generator that generates significant parts instead of forcing you to hand-craft them. This is not restricted to code; you could also generate tests or documents.

Stefan Tilkov provided an excellent example. Suppose, you are developing a Java application. Writing the unit tests in JUnit would be the normal way of operation. But, maybe JRuby would be a much better way to write unit tests. As both run on top of the JVM, this can be achieved easily. In this context, we use JRuby+xUnit APIs as our DSL.

I believe DSLs help climb over the one-size-fits-all barrier that traditional programming languages introduce. Creating a DSL does not need to be as complex as defining a programming language. XML Schema, metadata annotations, and UML profiles are nice examples.

Saturday, February 23, 2008

Generative versus Generic Programming

Andrey Nechypurenko, a smart colleague of mine, recently brought up an excellent thought. If we already know a domain very well, why should we bother building model-driven generators? Couldn't we just provide an application framework instead? Indeed, each framework introduces a kind of language. It implements commonalities but also hooks to take care of variability. So, when should we use model-driven techniques and when should we better rely on frameworks?

My first assumptions were:

- A DSL is much closer to the problem domain, which helps different stakeholders participate in establishing the concrete model. If stakeholder participation is essential, then a DSL might definitely be the better way.

- DSLs are (often) more productive. Programming against a framework is much more verbose than designing with a DSL. Needless to say, we need to take the effort of providing the generator into account.

In my opinion, the answer could also be to use both: a framework that captures the domain's core abstractions and a generator that generates applications on top of this framework.
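A minimal Python sketch of that combination, under simplifying assumptions: `ReportFramework` implements the commonality and exposes hook methods for variability, while the hypothetical `generate` function turns a tiny declarative model into an application by filling in those hooks. A real generator would usually emit source files; building the class at runtime just keeps the sketch short.

```python
class ReportFramework:
    """Framework: the fixed algorithm (commonality) calls hook methods
    (variability) that applications may override."""

    def render(self, rows):
        lines = [self.title()]
        lines += [self.format_row(row) for row in rows if self.include(row)]
        return "\n".join(lines)

    # Hook methods with default behaviour.
    def title(self):
        return "Report"

    def include(self, row):
        return True

    def format_row(self, row):
        return str(row)


def generate(model):
    """Hypothetical generator: maps a small model onto framework hooks and
    returns a new subclass of the framework."""
    hooks = {}
    if "title" in model:
        hooks["title"] = lambda self, text=model["title"]: text
    if "min_value" in model:
        hooks["include"] = lambda self, row, low=model["min_value"]: row >= low
    return type(model["name"], (ReportFramework,), hooks)


BigOrders = generate({"name": "BigOrders", "title": "Big orders", "min_value": 100})
print(BigOrders().render([50, 150, 300]))
# Big orders
# 150
# 300
```

The framework stays reusable on its own, and the model stays short because it only describes what differs.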

Any thoughts about these issues?

Monday, February 18, 2008

Relativity

Today, I had an inspiration. My spouse told me it would take 5 minutes to get ready for leaving home, but actually it took her over 20 minutes. As those "events" happen constantly, my assumption is that women move at a much faster speed than men. This way, 5 minutes on their spaceship equal 20 minutes of our time.

I also remember a professor at my university asking a student about the "evaluator problem". The student did not really understand the question. Do you? Actually, the right answer turned out to be that he was using C style malloc for allocating storage in his compiler's attribute evaluator.

What can we learn from this? Humans might have a completely different perspective on reality depending on their background and context. Whenever we are dealing with architecture design, we'll have to communicate with all those stakeholders. In order to prevent misunderstandings like the ones illustrated above, we need to introduce a kind of formal language and a common scale, in other words: a common understanding.

One of the most critical points in design is the consideration and elicitation of requirements. This also holds for requirement priorities. For example, a project manager might be more interested in partitioning the architecture so that the different teams can develop in the most effective way, while an architect will follow a completely different rationale for modularisation.

In many projects I was also surprised by how important a common project glossary is. Otherwise, people will interpret the same terms differently, which is not a big boost for effective communication. For example, in one SOA-based project senior managers expected process monitoring to reveal the business perspective (Business Process Monitoring). They were interested in observing the number of successful business transactions or the cash flow. What they actually got from development was system monitoring functionality such as showing load, bandwidth or faults. Guess how long it took development to correct this?

It is very likely that you have also experienced the architecture/implementation gap in your career. This inevitably occurs whenever architects throw their design specification over the fence and then leave the development team alone. Did you ever read a specification and understand it without any problems? Me neither! Some parts might be missing, others might not be implementable, and still others might be contradictory. Even formal standards such as CORBA, SOAP, and HTTP, which were developed by expert groups over long time periods, reveal those problems. How can you then expect your own specification to be perfect? Whatever is not perfect will then be corrected or interpreted in unanticipated ways.

Thus, it is essential that such clarifications happen as early as possible in a project. It is your responsibility as an architect to tackle this problem. Remember how often I have already mentioned that effective communication is the most important skill, one of the few moments where I do not abide by the DRY principle. There are a lot of methods and tools that explicitly address this issue. Think of agile methods, domain-driven design, or use case analysis, to name just a few.

Reality is relative. Thus introduce a common frame of reference for the stakeholders. This holds for software engineering, but also for real life :-)

Saturday, February 16, 2008

Workflows

Workflow engines such as Microsoft's WF (Workflow Foundation) are only rarely used these days. Most developers totally underestimate their power. However, in an integrated world where processes or workflows increasingly need to connect disparate, heterogeneous applications, just hard coding these workflows in traditional languages such as Java does not make any sense. First of all, coding in Java or C# requires programming skills, while business analysts are often the ones in charge of business processes. And secondly, hard coding in Java, C#, or C++ implies that all changes must happen in source code, with the code base becoming a beast that mixes business logic and workflow-related content.

Before covering the meat of this posting, I need to define what I understand by a workflow. By the way, I will use the terms workflow and business process interchangeably as I don't consider the differences stated by other authors very convincing.

If we just refer to Wikipedia we will find the following definition:

- A workflow is a reliably repeatable pattern of activity enabled by a systematic organization of resources, defined roles and mass, energy and information flows, into a work process that can be documented and learned. Workflows are always designed to achieve processing intents of some sort, such as physical transformation, service provision, or information processing.

Sounds rather confusing, right? Let me try my own definition.

- A workflow defines a set of correlated activities, each of which represents a (composite) component that takes input, transforms it, and sends the result via its output ports to subsequent activities. Transitions to an activity may be triggered implicitly by (human- or machine-initiated) events or explicitly by transfer of control

- Workflows can be considered as a Pipes & Filters architecture with the filters being the activities and the pipes being the communication channels between them

- All activities within a workflow share the same context such as common resources

- Workflows are (composite) activities themselves

- A workflow language is a DSL that allows you to express workflows declaratively. Examples include BPEL, ARIS, or XAML as used in WF

- A workflow runtime manages the (local) execution and lifetime of workflow instances

In fact, a workflow closely resembles traditional program code with the statements being the activities.
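The Pipes & Filters reading of these definitions might look like the following minimal Python sketch. It assumes activities are plain functions that receive the incoming data plus a shared context dictionary; `workflow`, `normalize`, `look_up_price`, and `add_tax` are hypothetical, and a real workflow runtime would add persistence, events, and instance management.

```python
from typing import Any, Callable, Dict

# An activity takes the incoming data plus the shared workflow context.
Activity = Callable[[Any, Dict[str, Any]], Any]


def workflow(*activities: Activity) -> Activity:
    """Composes activities like pipes and filters. The result is itself an
    activity, so workflows can be nested."""
    def run(data: Any, context: Dict[str, Any]) -> Any:
        for activity in activities:
            data = activity(data, context)  # output of one step feeds the next
        return data
    return run


def normalize(order, context):
    return order.strip().lower()


def look_up_price(item, context):
    return context["prices"][item]


def add_tax(amount, context):
    return amount * (1 + context["tax_rate"])


checkout = workflow(normalize, look_up_price, add_tax)
receipt = workflow(checkout, lambda total, context: f"{total:.2f} EUR")

shared = {"prices": {"onion": 4.0}, "tax_rate": 0.25}
print(checkout("  Onion ", shared))  # 5.0
print(receipt("Onion", shared))      # 5.00 EUR
```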

A typical real-life example is visiting a restaurant.

- The workflow starts when you enter the restaurant and arrive at the "wait to be seated" sign.

- The waitress will now ask you whether you have a reservation.

- If yes, she will look up the reservation and escort you to your table

- If no, she will try to determine whether a table is available

- If yes, she will escort you to the table

- If no, she may ask you to come back later => stop

- The waitress will provide you with a menu

- At the same time, another waitress might offer you water

- ...
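The branching part of the restaurant example can be encoded as a small state machine in which each activity returns the name of the next one. This Python sketch is an illustration only: the activity names and the `run` function are hypothetical, the parallel water offering is left out, and a workflow engine would usually interpret a declarative description rather than hand-written functions.

```python
def wait_to_be_seated(ctx):
    return "check_reservation"


def check_reservation(ctx):
    if ctx["guest"] in ctx["reservations"]:
        return "escort_to_table"
    return "check_free_table"


def check_free_table(ctx):
    if ctx["free_tables"] > 0:
        ctx["free_tables"] -= 1
        return "escort_to_table"
    return None  # "come back later": the workflow stops here


def escort_to_table(ctx):
    return "hand_out_menu"


def hand_out_menu(ctx):
    return None  # the rest of the evening is left out, as in the text


ACTIVITIES = {f.__name__: f for f in (
    wait_to_be_seated, check_reservation, check_free_table,
    escort_to_table, hand_out_menu)}


def run(ctx, start="wait_to_be_seated"):
    """Tiny workflow runtime: executes activities until one returns None."""
    trace, step = [], start
    while step is not None:
        trace.append(step)
        step = ACTIVITIES[step](ctx)
    return trace


print(run({"guest": "Stal", "reservations": {"Stal"}, "free_tables": 0}))
# ['wait_to_be_seated', 'check_reservation', 'escort_to_table', 'hand_out_menu']
print(run({"guest": "Smith", "reservations": set(), "free_tables": 0}))
# ['wait_to_be_seated', 'check_reservation', 'check_free_table']
```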

You will easily recognize that UML activity diagrams could be used to design workflows. Activities are triggered either by events or by active commands (e.g., you ask the waitress to bring you another beer, which is like an event, or she could ask you if you need anything else, which is more like an "invocation"). Thus, you could either use such a diagram to express a workflow or maybe a more domain-specific kind of language/model such as BPEL. This language could be graphical, textual, audio-based, and so forth.

The advantage of a (domain-specific) language is obvious: it is much easier for domain experts to express the workflows themselves. Moreover, the workflow descriptions could be used either to generate the actual code or to execute the workflow in a runtime engine.
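The following Python sketch illustrates the runtime-engine option. The workflow is a plain data table that a domain expert could edit, and one generic function executes any table of that shape. The table format (an action plus an ordered list of condition/target transitions) and the names SEATING_WORKFLOW and run_workflow are hypothetical. Real engines, such as BPEL engines, use far richer models.

```python
from typing import Any, Dict, List, Optional, Tuple

# Hypothetical declarative workflow: node -> action and ordered transitions.
# A transition is (condition, target); a condition of None means "always".
Transition = Tuple[Optional[str], str]
SEATING_WORKFLOW: Dict[str, Dict[str, Any]] = {
    "start": {"action": "greet guest",
              "next": [("has_reservation", "reserved"), (None, "check_tables")]},
    "reserved": {"action": "look up reservation", "next": [(None, "seat")]},
    "check_tables": {"action": "look for free table",
                     "next": [("table_free", "seat"), (None, "later")]},
    "seat": {"action": "escort to table", "next": [(None, "menu")]},
    "menu": {"action": "provide menu", "next": []},
    "later": {"action": "ask to come back later", "next": []},
}


def run_workflow(definition: Dict[str, Dict[str, Any]],
                 facts: Dict[str, bool], start: str = "start") -> List[str]:
    """Generic engine: follows the first matching transition until none is left."""
    performed: List[str] = []
    node: Optional[str] = start
    while node is not None:
        step = definition[node]
        performed.append(step["action"])
        node = None
        transitions: List[Transition] = step["next"]
        for condition, target in transitions:
            if condition is None or facts.get(condition, False):
                node = target
                break
    return performed
```

The engine does not change when the seating process changes; only the table does. A cyclic table would make this sketch loop forever, which a real engine would have to guard against.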

Sometimes workflow transitions depend on conditions such as "if the customer is a special guest, give him a window table." These conditions, however, might also be subject to change. Hard-coding such conditions in activities thus causes the same problems as hard-coding workflows in C# or Java. As a consequence, we should also specify such rules declaratively. This is where Business Rules Engines enter the stage. Business rules might be expressed explicitly in a workflow language or just be hidden in special activity types.
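A minimal Python sketch of the idea: the window-table condition lives in a separate rule list instead of inside the activity, so it can change without touching the activity code. Rule, fire_rules, SEATING_RULES, and choose_table are hypothetical names. fire_rules simply applies each matching rule once, in list order. That is far simpler than the pattern matching (e.g. Rete-based) of a real business rules engine.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Facts = Dict[str, object]


@dataclass(frozen=True)
class Rule:
    """A business rule: if `condition` holds for the facts, `action` updates them."""
    name: str
    condition: Callable[[Facts], bool]
    action: Callable[[Facts], None]


def fire_rules(rules: List[Rule], facts: Facts) -> List[str]:
    """Apply every matching rule once, in list order; return the names of fired rules."""
    fired: List[str] = []
    for rule in rules:
        if rule.condition(facts):
            rule.action(facts)
            fired.append(rule.name)
    return fired


# Rule set kept outside the activity; it can change without touching choose_table.
SEATING_RULES = [
    Rule("special guests get a window table",
         lambda f: bool(f.get("special_guest", False)),
         lambda f: f.update(table_type="window")),
    Rule("everyone else gets a standard table",
         lambda f: "table_type" not in f,
         lambda f: f.update(table_type="standard")),
]


def choose_table(guest: Facts) -> str:
    """Activity that delegates the decision to the rules instead of hard-coding it."""
    facts = dict(guest)
    fire_rules(SEATING_RULES, facts)
    return str(facts["table_type"])
```

For example, choose_table({"special_guest": True}) yields "window", while a guest without that flag gets "standard".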

How can we identify the workflows while creating the architecture? User stories, use cases, and scenarios help determine the workflows in a system. Whether a given workflow should be hard-coded or implemented with a workflow language depends on requirements, frequency of change, required variability, and other factors. A software architect needs to address these questions.

Friday, February 15, 2008

Removing unnecessary abstractions

A good architecture design should only ever contain a minimal set of abstractions and dependencies. According to Antoine de Saint-Exupéry, a system is simple when it is not possible to remove anything without violating the requirements. Again, we can consider this from an architecture entropy viewpoint: minimalism and simplicity imply the lowest possible entropy.

Let me give you an example of what a design should not look like. Suppose you are developing a graphical editor. In the first design sketch, you introduce an abstraction called Shape with functionality such as Draw(). From this base type you then derive subtypes such as PolygonicShape and RoundedShape. Finally, Rectangle, Square, and Triangle become direct descendants of PolygonicShape, and so forth.

After a while you might recognize that it does not make any sense to introduce the intermediary layer consisting of PolygonicShape and RoundedShape. You find out that in your context all concrete classes such as Ellipse, Rectangle, Triangle, ... should be directly derived from Shape, because you don't differentiate between types of shapes in your software.

By removing the intermediary layer, you have eliminated unnecessary abstractions and dependencies. Obviously, this also adheres to the KISS principle. But why, you may ask, don't we remove Shape too? The answer is very simple: Shape introduces the common behavior required to handle all concrete shape subtypes uniformly in the editor. Without it, we would need to introduce large switch statements, type codes, or reflection mechanisms in several places of our application. Thus, removing Shape would considerably increase entropy.
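The refactored hierarchy could look like the following Python sketch. Draw() becomes draw() to follow Python naming. draw() merely returns a name as a stand-in for real rendering, and Editor is a hypothetical class. The point is that the editor handles every shape through Shape alone, with no intermediary layer and no switch on concrete types.

```python
from abc import ABC, abstractmethod
from typing import List


class Shape(ABC):
    """The one abstraction the editor needs to treat all shapes uniformly."""

    @abstractmethod
    def draw(self) -> str: ...


# Concrete shapes derive directly from Shape; there is no PolygonicShape/RoundedShape layer.
class Rectangle(Shape):
    def draw(self) -> str:
        return "rectangle"


class Triangle(Shape):
    def draw(self) -> str:
        return "triangle"


class Ellipse(Shape):
    def draw(self) -> str:
        return "ellipse"


class Editor:
    def __init__(self) -> None:
        self.shapes: List[Shape] = []

    def render(self) -> List[str]:
        # Polymorphic dispatch replaces switch statements or type codes.
        return [shape.draw() for shape in self.shapes]


editor = Editor()
editor.shapes.extend([Rectangle(), Ellipse(), Triangle()])
rendered = editor.render()
```

Adding a new shape means adding one subclass; Editor.render() stays untouched.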

As you can surely see, determining the applicability of this architecture refactoring is not trivial, because you need to consider different layers and levels of abstraction in your design. For example, in another context it might be important to introduce intermediary abstractions such as PolygonicShape because you need to treat polygons differently from rounded shapes. In this sense, truth is always relative.

Thursday, February 14, 2008

Work Life Balance - Revisited

Right after each of my postings related to Work Life Balance, my inbox filled up with questions about how I personally keep the balance. That's why I want to spend a few sentences on this personal issue, although it is not related to Software Architecture (at least at first sight).

I still consider myself a workaholic on rails. Only a few years ago I used to work the whole week without many exceptions. After some health problems, I remembered the time when I had been totally enthusiastic about sports, and I started to go biking and running regularly. That's where the rails come in. OK, I overdid the running and got knee problems, which is why I lean more towards biking these days. Typically, I run or bike almost every day from spring to autumn. For example, I enjoy biking to work (10 km). On my way back home I often take a detour through a large forest and along a bike lane near the Isar river here in Munich (30-50 km). Sometimes, I leave the office in the late afternoon and continue my work at home. Whenever possible, I also go running, which is an excellent way to relax, especially in winter when biking is not that cool (or should I better say when it is too cool). After 10-12 km, which may take more than 60 minutes depending on my training intensity, all problems are gone and my mind is open for new ideas.

My other hobbies include composing music, reading books, and listening to podcasts, audiobooks, or music on my iPod, among many other interests. Yes, I definitely have more interests than time.

My experience has taught me that sports (no matter which) are the most important way to keep your balance. So is meeting people who have no interest in IT topics. The same holds for all hobbies that help you forget about work-related challenges (formerly known as problems). I have never been as creative as when keeping my work and life in balance. The more time I spend on sports and other interests, the more productive I am. This is kind of surprising, as I always believed the opposite (the more time spent working, the more productivity).

Just relax!

All about Cycles

Here comes a real-world example. In a management application, the distributed agents in charge of managed objects (such as routers, load balancers, switches, firewalls) are accessed by a centralized monitoring application. For this purpose, the agents offer functionality such as setter/getter functions. The monitoring subsystem inherently depends on the agents it monitors. After a while, the developers of the agent subsystem think about returning time stamps to the monitoring subsystem whenever it requests some information. But where could they obtain these central time stamps? The team decides to add time stamp functionality to the central monitoring subsystem. Unfortunately, the agents now depend on the monitoring subsystem. The developers have established a dependency cycle.

"So, who cares?", you might say. The problem with such circular references is that they add accidental complexity. Mind the implications such as:

- If there is a cycle within your architecture involving two or more subsystems, you won't be able to test one of the subsystems in isolation without needing to test the other ones.

- Re-using one of the subsystems in the cycle implies you also need to re-use the other subsystems in the cycle.

It is pretty obvious that dependency cycles are a bad thing. They represent an architectural smell. But how can we cure the problem? Can you hear the horns playing a fanfare? The answer is: by applying an architectural refactoring!

So, how could we get rid of a dependency cycle?

- Option 1: by redirecting the dependency somewhere else. In the example above, we could introduce a time subsystem that the agents use to obtain time stamps, instead of adding this functionality to the monitoring subsystem. This way, we break the cycle.

- Option 2: by reversing one of the dependencies. For example, we could replace a publisher/subscriber pull model (the event observer itself asks for events) with a push model (the event provider notifies the event consumer). Another example is introducing interfaces: instead of letting a component depend on another component, we let it depend on an interface. This way, a component always uses interfaces and never component implementations directly.

- Option 3: by removing the dependency completely if it is not necessary. For example, consider master/detail relationships in relational databases: only one dependency direction makes sense here, and the other one is obsolete.
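Applied to the monitoring example, Options 1 and 2 might look like the following Python sketch. The time stamps come from a separate time subsystem (Option 1). The agents depend only on a TimeSource interface that is injected from outside (Option 2). All class names are hypothetical, and the counter-based TimeService stands in for a real clock. The resulting dependencies are Monitor → Agent → TimeSource ← TimeService, so there is no cycle.

```python
import itertools
from typing import Dict, List, Protocol, Tuple


class TimeSource(Protocol):
    """Interface the agents depend on (Option 2)."""

    def now(self) -> int: ...


class TimeService:
    """Separate time subsystem (Option 1); a counter stands in for a real clock."""

    def __init__(self) -> None:
        self._ticks = itertools.count(1)

    def now(self) -> int:
        return next(self._ticks)


class Agent:
    """Agent for one managed object; it no longer knows the monitoring subsystem."""

    def __init__(self, name: str, clock: TimeSource) -> None:
        self.name = name
        self._clock = clock  # injected from outside
        self._values: Dict[str, object] = {}

    def set(self, key: str, value: object) -> None:
        self._values[key] = value

    def get(self, key: str) -> Tuple[object, int]:
        return self._values.get(key), self._clock.now()


class Monitor:
    """Monitoring depends on the agents, but not vice versa."""

    def __init__(self, agents: List[Agent]) -> None:
        self.agents = agents

    def poll(self, key: str) -> Dict[str, Tuple[object, int]]:
        return {agent.name: agent.get(key) for agent in self.agents}


# Composition root: the only place that knows all concrete classes.
clock = TimeService()
router = Agent("router", clock)
firewall = Agent("firewall", clock)
router.set("load", 42)
firewall.set("load", 7)
snapshot = Monitor([router, firewall]).poll("load")
```

Wiring all objects together in one composition root is what an IoC container would automate.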

Dependency Injection as provided by all those IoC containers is very helpful for avoiding dependency cycles. Even if there are no dependency cycles in your system, you should always avoid unnecessary dependencies, because they inevitably increase entropy and thus the chance of circular references.

But how can we detect such dependency cycles within our system? This is where CQM (Code Quality Management) tools enter the stage. Of course, these tools are not restricted to problems with dependencies. They are also helpful with a lot of other architecture quality related measurements.
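To give an idea of what such a tool checks, here is a minimal Python sketch that finds one dependency cycle in a graph of subsystems. It uses a depth-first search with the classic three-color scheme. The function name and the dict-of-lists graph format are assumptions. A real CQM tool analyzes actual code dependencies and would more likely report all strongly connected components rather than a single cycle.

```python
from typing import Dict, List, Optional


def find_cycle(deps: Dict[str, List[str]]) -> Optional[List[str]]:
    """Return one dependency cycle as a path whose first and last node coincide, or None.

    Depth-first search with three colors: WHITE = not yet visited, GRAY = on the
    current DFS path, BLACK = fully explored. Meeting a GRAY node means a back edge,
    i.e. a cycle.
    """
    WHITE, GRAY, BLACK = 0, 1, 2
    color: Dict[str, int] = {}
    for node, targets in deps.items():
        color.setdefault(node, WHITE)
        for target in targets:
            color.setdefault(target, WHITE)
    path: List[str] = []

    def visit(node: str) -> Optional[List[str]]:
        color[node] = GRAY
        path.append(node)
        for target in deps.get(node, []):
            if color[target] == GRAY:
                return path[path.index(target):] + [target]
            if color[target] == WHITE:
                cycle = visit(target)
                if cycle:
                    return cycle
        path.pop()
        color[node] = BLACK
        return None

    for node in list(color):
        if color[node] == WHITE:
            cycle = visit(node)
            if cycle:
                return cycle
    return None


# The monitoring example before and after introducing a separate time subsystem.
before = {"monitoring": ["agents"], "agents": ["monitoring"]}
after = {"monitoring": ["agents"], "agents": ["time"], "time": []}
```

Here find_cycle(before) reports monitoring → agents → monitoring, and find_cycle(after) finds nothing.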

Can we always avoid cycles? No, because sometimes they are inherent in the domain. For example, in Microsoft COM, which relies on reference counting, graph structures consisting of COM objects required reference cycles. However, one could certainly ask whether the design of COM was appropriate in this respect. But that's a completely different issue.

Thus, the general guideline should always be: avoid unnecessary (accidental) dependencies, break dependency cycles!

Tuesday, February 12, 2008

Emergence

Suppose you are going to develop a traffic control system for a rather large city. The goal is to optimize traffic throughput, which could be measured by the average distance each cyclist and motorist covers per unit of time. As a first approach, you might suggest a purely centralized control subsystem.

There are various problems with this task:

- In a rather large traffic infrastructure, traffic cannot be controlled from a single centralized component. First of all, this component would represent a single point of failure. Second, it would neither scale nor perform due to the sheer number of traffic signals involved.

- The requirement of traffic optimization reveals a significant problem. Assume that there is a main road through the city. The best way to achieve the goal could be to just set all traffic lights on the main road to green. Participants driving on side streets would then need to wait forever. In multithreading, this is called starvation.

How could we solve those problems?

- Instead of using a centralized approach, we could rely on a completely decentralized one. For example, each street crossing could permanently measure the traffic and adapt its traffic lights automatically to the current situation. If all street crossings act independently, however, local tactics might dominate the global strategy. Obviously, this is not what we want: strategy should always dominate tactics. Hence, we let the local decisions of street crossings depend on "neighboring" street crossings. If appropriate, we could also mix centralized control with decentralized autonomous adaptation. That basically means that we control the overall strategy, but allow the system to adapt its tactics automatically and locally. Is it always useful to apply decentralized approaches? No. Here is a real-life experiment: line up a number of people, each of them stretching out one finger. Put a large stick on their fingers and tell the group to lower the stick systematically and gently to the ground. They won't succeed until one person finally becomes the leader and coordinates the whole action.

- We also learn from the example that we really need to address requirements with much more care, especially in systems with complex interactions. In the example above, the target function should consider not only the average flow but also possible starvation. By the way, the same holds if we replace the traffic example with a Web shop and its Web traffic. There, starvation would imply that some shoppers have a fantastic experience while others cannot receive any Web page within an acceptable response time. Guess how often the latter would revisit the shop. A wrong understanding of requirements can seriously endanger your business goals.
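Here is a minimal Python sketch of a decentralized crossing that combines both points. Each crossing decides locally and takes its upstream neighbor's main-road queue into account. A starvation guard (no direction waits more than MAX_WAIT ticks) dominates the local greedy tactic. The class, its parameters, and the values of MAX_WAIT and NEIGHBOR_WEIGHT are hypothetical and not tuned for any real traffic model.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Crossing:
    """One autonomous crossing with a main-road queue and a side-street queue."""
    name: str
    queues: Dict[str, int] = field(default_factory=lambda: {"main": 0, "side": 0})
    waited: Dict[str, int] = field(default_factory=lambda: {"main": 0, "side": 0})
    upstream: Optional["Crossing"] = None  # neighbor that feeds our main road

    MAX_WAIT = 3            # class constant (no annotation, so not a dataclass field)
    NEIGHBOR_WEIGHT = 0.5   # how much the neighbor's main queue counts here

    def choose_green(self) -> str:
        # Global constraint first: a starving direction always gets green.
        for direction in ("side", "main"):
            if self.waited[direction] >= self.MAX_WAIT:
                return direction
        # Local tactic, informed by the neighbor: expected arrivals add to main demand.
        expected = self.upstream.queues["main"] * self.NEIGHBOR_WEIGHT if self.upstream else 0.0
        main_demand = self.queues["main"] + expected
        return "main" if main_demand >= self.queues["side"] else "side"

    def tick(self, arrivals: Optional[Dict[str, int]] = None, capacity: int = 2) -> str:
        """Add arriving cars, give one direction green, let up to `capacity` cars pass."""
        for direction, cars in (arrivals or {}).items():
            self.queues[direction] += cars
        green = self.choose_green()
        red = "side" if green == "main" else "main"
        self.queues[green] = max(0, self.queues[green] - capacity)
        self.waited[green] = 0
        if self.queues[red] > 0:
            self.waited[red] += 1  # only count waiting time when cars are actually waiting
        return green


# Heavy main-road traffic: 3 new cars per tick, one car waiting on the side street.
a = Crossing("A", queues={"main": 0, "side": 1})
greens: List[str] = [a.tick({"main": 3}) for _ in range(5)]
```

Without the guard, the greedy tactic would keep the main road green forever. With it, the side street gets green in the fourth tick.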

The question is how to design such decentralized systems. There are lots of options:

- Infrastructure: P2P networks are one way to organize resources so that they can be located and used in a decentralized way. Cloud Computing, formerly known as Grid Computing, is another cool example.

- Algorithms: Genetic algorithms help systems to automatically adapt themselves to their context.

- Cooperation models: Swarm intelligence, as found in ant colonies, illustrates how emergent behavior can substitute for centralized control.

- Architecture: Patterns such as Leaders/Followers or Blackboard help introduce decentralized approaches.

- Technologies: Rules engines and declarative languages such as Prolog are useful for providing decentralized computing.

The challenge with decentralized versus centralized systems is often deciding when to use which, and how decentralized the system should be. This is not always obvious, so instead of a general rule let me give you some examples:

- In a sensor network, you might place many small robots in a specific area in order to monitor environmental data. These robots would be completely autonomous. Even if some of them fail, the overall system goal can still be achieved.

- In a Web crawler, several threads could search for URLs in parallel, but they would need to synchronize themselves (e.g., using a tuple space) in order to prevent endless loops. This resembles ant populations.

- In a car or plane you need central control, but some parts such as airbags or ESP can also operate and adapt independently. This is more of a hybrid approach, with the global strategy implemented by a centralized approach.

- In embedded (real-time) controllers (such as your mobile phone), everything is typically centralized because behavior must be statically predicted and configured.
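The crawler example can be sketched in Python as follows. The in-memory WEB dictionary stands in for real HTTP fetching and deliberately contains cycles. A lock-protected visited set is a simple stand-in for a tuple space: it is the one point where the otherwise independent worker threads coordinate. All names are hypothetical.

```python
import threading
from queue import Queue
from typing import Dict, List, Optional, Set

# Hypothetical in-memory "Web": page -> outgoing links (a <-> b and c -> a form cycles).
WEB: Dict[str, List[str]] = {
    "a": ["b", "c"],
    "b": ["a", "d"],
    "c": ["a", "d"],
    "d": ["e"],
    "e": [],
}


def crawl(start: str, workers: int = 3) -> Set[str]:
    frontier: "Queue[Optional[str]]" = Queue()
    visited: Set[str] = {start}
    lock = threading.Lock()  # shared coordination point (stand-in for a tuple space)
    frontier.put(start)

    def worker() -> None:
        while True:
            url = frontier.get()
            if url is None:  # sentinel: all work is done
                frontier.task_done()
                return
            for link in WEB.get(url, []):
                with lock:
                    if link in visited:
                        continue  # already claimed by some thread, so no endless loop
                    visited.add(link)
                frontier.put(link)
            frontier.task_done()

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for thread in threads:
        thread.start()
    frontier.join()  # returns once every queued page has been processed
    for _ in threads:
        frontier.put(None)  # one sentinel per worker
    for thread in threads:
        thread.join()
    return visited


pages = crawl("a")
```

Each page is claimed exactly once under the lock before it is queued. The cycles in WEB therefore cannot make the threads loop endlessly.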

The more co-operation your components require and/or the more determinism you need, the less appropriate a decentralized approach becomes, and vice versa.